A staging area, or landing zone, is an intermediate storage area used for data processing during the extract, transform and load (ETL) process. The data staging area sits between the data source(s) and the data target(s), which are often data warehouses, data marts, or other data repositories. A typical scenario: "We have a simple data warehouse that takes data from a few RDBMS source systems and loads the data into the dimension and fact tables of the warehouse." In that setup, the staging area is where you hold temporary tables on the data warehouse server, and the staging tables feed the work-area or fact tables. We need a staging area to hold the data and to perform data cleansing and merging before loading the data into the warehouse.

If the source and target databases are different and the target table is large (millions of records), then without a staging table the Informatica mapping has to use a Lookup transformation to find out whether each record already exists in the target. Because the target is so large, building the lookup cache is costly and hurts performance. If we instead create staging tables in the target database, we can simply do an outer join in the Source Qualifier to decide between insert and update. This performs well and avoids a full table scan of the target to determine inserts and updates. We can also create indexes on the staging tables: since these tables are designed for a specific application, the indexes do not affect any other schemas or users.

When loading flat files into the data warehouse, we can also perform cleansing in the staging area. Data cleansing, also known as data scrubbing, is the process of ensuring that a set of data is correct and accurate. During data cleansing, records are checked for accuracy and consistency.
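Here is a minimal sketch of the outer-join approach. It uses Python's built-in `sqlite3` module in place of the target database and the Informatica mapping. The table names `dim_customer` and `stg_customer` and their columns are hypothetical. In a real mapping, the same SELECT would typically go into the Source Qualifier's SQL override, and the computed flag would drive the insert/update decision.

```python
import sqlite3

# Hypothetical tables: the target dimension and its staging copy live in the
# same (target) database, so a single query can compare them.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE stg_customer (customer_id INTEGER, name TEXT);
""")
conn.executemany("INSERT INTO dim_customer VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.executemany("INSERT INTO stg_customer VALUES (?, ?)", [(2, "Robert"), (3, "Carol")])

# Outer join staging -> target: a NULL target key means the row is new (insert);
# a match means the row already exists (update). No lookup cache is built.
rows = conn.execute("""
    SELECT s.customer_id, s.name,
           CASE WHEN t.customer_id IS NULL THEN 'INSERT' ELSE 'UPDATE' END AS action
    FROM stg_customer AS s
    LEFT OUTER JOIN dim_customer AS t ON t.customer_id = s.customer_id
    ORDER BY s.customer_id
""").fetchall()

# Apply each row according to its flag.
for customer_id, name, action in rows:
    if action == "INSERT":
        conn.execute("INSERT INTO dim_customer (customer_id, name) VALUES (?, ?)",
                     (customer_id, name))
    else:
        conn.execute("UPDATE dim_customer SET name = ? WHERE customer_id = ?",
                     (name, customer_id))
conn.commit()
```

After the run, customer 2 has been updated to "Robert" and customer 3 has been inserted. The target's primary key lets the join probe the target by index instead of caching it.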

- Since staging is a one-to-one copy of the ODS (Operational Data Store), we truncate and reload it on each run.

- We can create indexes on the staging tables so that the Source Qualifier query performs well.

- With a staging area, we do not need to rely on an Informatica transformation (such as a Lookup) to find out whether a record already exists.
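The truncate-and-reload pattern and the staging index from the list above can be sketched as follows. This is a minimal illustration with `sqlite3`. The table `stg_order`, its index, and the function `reload_staging` are hypothetical names. SQLite has no `TRUNCATE` statement, so an unqualified `DELETE` stands in for it here.

```python
import sqlite3

def reload_staging(conn, ods_rows):
    """Truncate-and-reload: empty the staging table, then copy the ODS rows 1:1."""
    # SQLite has no TRUNCATE; an unqualified DELETE removes every row.
    conn.execute("DELETE FROM stg_order")
    conn.executemany("INSERT INTO stg_order (order_id, amount) VALUES (?, ?)", ods_rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stg_order (order_id INTEGER, amount REAL)")
# The staging table belongs to this application only, so indexing it
# does not affect other schemas or users.
conn.execute("CREATE INDEX idx_stg_order_id ON stg_order (order_id)")

reload_staging(conn, [(1, 10.0), (2, 20.0)])   # first run
reload_staging(conn, [(2, 25.0), (3, 30.0)])   # next run fully replaces the first
print(conn.execute("SELECT * FROM stg_order ORDER BY order_id").fetchall())
```

Because staging never accumulates history, each run starts from an empty table, and the index survives the reload.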

Data cleansing

Weeding out unnecessary or unwanted things (stray characters, extra spaces, etc.) from incoming data to make it more meaningful and informative.
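A small example of this kind of cleansing is a function that removes control characters and surplus whitespace from a text field. It is a minimal sketch. The function name `cleanse` is hypothetical, and the choice to return `None` for empty values is an assumption about how blanks should be loaded.

```python
import re

def cleanse(value):
    """Remove control characters, collapse whitespace, and trim a text field.

    Returns None when nothing meaningful is left (assumed convention for blanks).
    """
    if value is None:
        return None
    # Replace control characters (tabs, CR/LF, NUL, DEL, ...) with spaces.
    value = re.sub(r"[\x00-\x1f\x7f]", " ", value)
    # Collapse runs of whitespace into one space and trim both ends.
    value = re.sub(r"\s+", " ", value).strip()
    return value or None

print(cleanse("  John\t\tSmith \r\n"))  # John Smith
```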

Data merging

Data from heterogeneous systems is gathered and put together.
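The sketch below illustrates merging. It maps records from two differently shaped sources onto one common layout before they are combined. The source systems ("CRM" and "BILLING"), their field layouts, and the target field names are all hypothetical.

```python
from datetime import datetime

# Hypothetical extracts: a CRM system returns dicts with ISO dates,
# a billing system returns tuples with "LAST, FIRST" names and dd/mm/yyyy dates.
crm_rows = [{"cust_no": "101", "full_name": "Alice Smith", "created": "2023-01-15"}]
billing_rows = [("102", "SMITH, BOB", "15/02/2023")]

def from_crm(row):
    """Map a CRM record onto the common staging layout."""
    return {
        "customer_id": int(row["cust_no"]),
        "name": row["full_name"],
        "created_on": datetime.strptime(row["created"], "%Y-%m-%d").date(),
        "source": "CRM",
    }

def from_billing(row):
    """Map a billing record onto the same layout, reordering the name parts."""
    cust_no, name, created = row
    last, first = [part.strip().title() for part in name.split(",", 1)]
    return {
        "customer_id": int(cust_no),
        "name": f"{first} {last}",
        "created_on": datetime.strptime(created, "%d/%m/%Y").date(),
        "source": "BILLING",
    }

# Once both feeds share one shape, they can be put together.
merged = [from_crm(r) for r in crm_rows] + [from_billing(r) for r in billing_rows]
```

Keeping a `source` column is one common design choice, because it lets later steps trace each merged row back to its system of origin.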

Data scrubbing

Data scrubbing is the process of fixing or eliminating individual pieces of data that are incorrect, incomplete or duplicated before the data is passed to end user.

Data scrubbing is aimed at more than eliminating errors and redundancy. The goal is also to bring consistency to various data sets that may have been created with different, incompatible business rules.
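As an illustration, a scrubbing pass can drop incomplete rows, remove duplicates, and standardize codes that different systems encode differently. This is a minimal sketch. The gender-code mapping, the `"U"` (unknown) fallback, and the rule "keep the first occurrence of each id" are assumptions chosen for the example.

```python
# Hypothetical mapping that reconciles codes from systems built with
# different business rules (letters, words, or numbers).
GENDER_CODES = {"M": "M", "MALE": "M", "1": "M", "F": "F", "FEMALE": "F", "2": "F"}

def scrub(records):
    """Remove incomplete rows and duplicates, and standardize gender codes."""
    seen = set()
    clean = []
    for rec in records:
        # Incomplete: a row without a customer_id cannot be loaded.
        if rec.get("customer_id") is None:
            continue
        # Duplicated: keep only the first occurrence of each customer_id.
        if rec["customer_id"] in seen:
            continue
        seen.add(rec["customer_id"])
        # Inconsistent: map every known code to one convention; unknown -> "U".
        code = str(rec.get("gender", "")).strip().upper()
        clean.append({**rec, "gender": GENDER_CODES.get(code, "U")})
    return clean

rows = [
    {"customer_id": 1, "gender": "male"},
    {"customer_id": 1, "gender": "M"},      # duplicate id
    {"customer_id": None, "gender": "F"},   # incomplete
    {"customer_id": 2, "gender": 2},        # numeric code from another system
    {"customer_id": 3, "gender": "x"},      # unrecognised code
]
print(scrub(rows))
```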

ODS (Operational Data Store):

My understanding of the ODS is that it is a replica of the OLTP system. Its purpose is to reduce the burden on the production (OLTP) system when fetching data to load the targets. Hence it is a mandatory requirement for every warehouse.

So do we transfer data from the OLTP system to the ODS every day to keep it up to date?

The OLTP database is sensitive, so it should not be hit with many SELECT statements. They can degrade its performance, and if something goes wrong while fetching data from OLTP into the data warehouse, it directly impacts the business.

The ODS is a replica of the OLTP system.

The ODS is usually refreshed through Oracle jobs.

It enables management to gain a consistent picture of the business.
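A daily ODS refresh can be sketched as a full copy from the OLTP database. This is a minimal illustration that uses two `sqlite3` connections in place of Oracle and a plain function in place of a scheduled Oracle job. The `orders` table and `refresh_ods` are hypothetical. Production setups often use incremental or replication-based refreshes instead.

```python
import sqlite3

def refresh_ods(oltp, ods):
    """Full refresh of the hypothetical `orders` table from OLTP into the ODS.

    OLTP is read once, in bulk; warehouse loads then query the ODS copy
    instead of the production database.
    """
    rows = oltp.execute("SELECT id, status FROM orders").fetchall()
    # The connection context manager commits on success and rolls back on error,
    # so the delete and the re-insert take effect together or not at all.
    with ods:
        ods.execute("DELETE FROM orders")
        ods.executemany("INSERT INTO orders (id, status) VALUES (?, ?)", rows)

oltp = sqlite3.connect(":memory:")
ods = sqlite3.connect(":memory:")
for db in (oltp, ods):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")

oltp.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "NEW"), (2, "NEW")])
oltp.commit()
refresh_ods(oltp, ods)                  # e.g. the nightly run

oltp.execute("UPDATE orders SET status = 'SHIPPED' WHERE id = 2")
oltp.commit()
refresh_ods(oltp, ods)                  # the next run picks up the change
print(ods.execute("SELECT * FROM orders ORDER BY id").fetchall())
```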

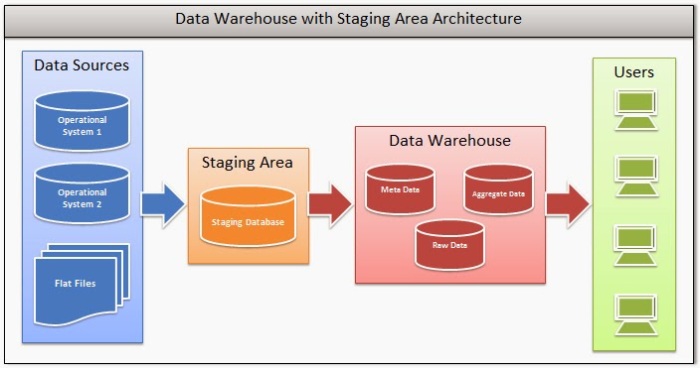

Image of the staging area: